Decentralized AI inference is emerging as an important part of the fast-moving AI landscape. It runs models on spare GPUs contributed by individuals around the world rather than in a central data center. The network is the indispensable component here: every request has to travel between the user's device and a remote inference provider. That calls for a secure peer-to-peer (P2P) communication channel, and the networking stack must deliver robust connectivity, strict permission control, and uncompromised privacy.

Networking Challenges in Decentralized AI

Decentralized AI inference networking requires:

- Connectivity: Ensuring peer-to-peer connections under any network condition.

- Permission: Allowing only authorized users to access the inference provider.

- Privacy: Ensuring that communication remains confidential, inaccessible to any third parties, including relayers.

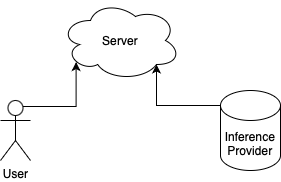

The traditional way to meet these requirements is centralized: build a relay service with authentication and end-to-end encryption. For a decentralized inference platform, however, this adds significant system complexity and development overhead.

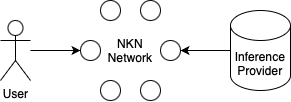

Introducing the NKN Network Solution

NKN (New Kind of Network) provides decentralized peer-to-peer communication that fits the needs of decentralized AI inference. It offers:

- Seamless operation in any network condition.

- Built-in public key identity for straightforward permission management.

- End-to-end encryption for all communications, safeguarding privacy.

NKN is free, open source, and decentralized, and it is supported by a robust community. The network has over 60,000 nodes and handles around 20 billion messages daily, which speaks to its efficacy and reliability.
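To make the permission point concrete, here is a minimal sketch of how an inference provider might gate requests on the sender's public key. It assumes that an NKN client address is either a bare 64-character hex public key or `<identifier>.<public key>`, and that the network authenticates the sender address; both assumptions should be checked against the NKN documentation. `public_key_of` and `Allowlist` are hypothetical names for illustration, not part of any NKN SDK.

```python
import re

# Assumption: the public-key part of an address is 64 lowercase hex characters.
_PUBKEY_RE = re.compile(r"[0-9a-f]{64}")


def public_key_of(address: str) -> str:
    """Return the public-key part of an NKN-style address, lowercased."""
    # Everything after the last dot is treated as the key; a bare key has no dot.
    pubkey = address.rsplit(".", 1)[-1].lower()
    if not _PUBKEY_RE.fullmatch(pubkey):
        raise ValueError(f"not a valid NKN-style address: {address!r}")
    return pubkey


class Allowlist:
    """Hypothetical helper: public keys allowed to request inference."""

    def __init__(self, allowed_keys):
        self._allowed = {key.lower() for key in allowed_keys}

    def permits(self, address: str) -> bool:
        """True only if the address parses and its key is on the list."""
        try:
            return public_key_of(address) in self._allowed
        except ValueError:
            return False
```

Because the check is keyed on the public key rather than the identifier prefix, a user who connects from several devices (for example `laptop.<key>` and `phone.<key>`) is authorized once.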

Utilizing NKN Network for Decentralized Inference

Using NKN removes most of the networking work from decentralized AI inference. High-level tools such as nkn-tunnel and nConnect provide transparent end-to-end TCP tunneling and are easy to use: nkn-tunnel exposes a single target port on the destination machine, while nConnect gives access to the destination machine's entire network.

For developers who want more control and additional features, the NKN SDKs, such as nkn-sdk-go and nkn-sdk-js, are a more versatile option. They enable message-based communication and offer finer control over networking behavior.
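As a rough illustration of what message-based communication could look like for inference, the sketch below defines a JSON request/reply envelope and a provider-side handler. It deliberately does not call any NKN SDK: the envelope fields (`id`, `prompt`, `result`, `error`) and the helpers `make_request`, `handle_message`, `run_model`, and `is_authorized` are all assumptions made for this example. In practice the payload bytes would be handed to the SDK's send call, and the handler would be wired to its incoming-message callback.

```python
import json
import uuid


def make_request(prompt: str) -> bytes:
    """Client side: encode one inference request as a message payload."""
    # The random id lets the client match a reply to its request.
    return json.dumps({"id": uuid.uuid4().hex, "prompt": prompt}).encode("utf-8")


def _reply(**fields) -> bytes:
    """Encode a reply envelope as UTF-8 JSON bytes."""
    return json.dumps(fields).encode("utf-8")


def handle_message(src: str, payload: bytes, run_model, is_authorized) -> bytes:
    """Provider side: turn one incoming message into one reply payload.

    src           -- sender address as reported by the transport
    run_model     -- callable(prompt) -> JSON-serializable result (hypothetical)
    is_authorized -- callable(src) -> bool (hypothetical permission check)
    """
    try:
        request = json.loads(payload.decode("utf-8"))
        request_id, prompt = request["id"], request["prompt"]
    except (ValueError, KeyError, TypeError):
        # Covers invalid UTF-8, invalid JSON, and envelopes of the wrong shape.
        return _reply(id=None, error="malformed request")
    if not is_authorized(src):
        return _reply(id=request_id, error="unauthorized")
    return _reply(id=request_id, result=run_model(prompt))
```

Keeping the handler a pure function of `(src, payload)` means the same logic can sit behind either SDK, and it can be unit-tested without a network connection.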

Conclusion

NKN addresses the three critical challenges of decentralized AI inference, namely connectivity, permission, and privacy, through its peer-to-peer communication framework. By lowering the barrier to entry and hiding much of the networking complexity, it paves the way for a more inclusive, efficient, and secure decentralized AI landscape. As the community and the technology continue to grow, so does the potential for decentralized AI inference on NKN.