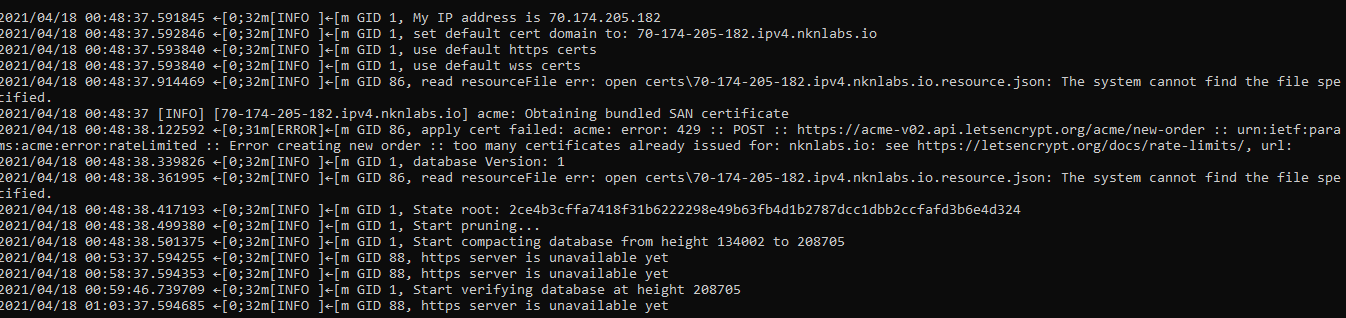

This solution fixes a node that stays stuck in the “pruning” status. The problem is related to the node's database.

With this tutorial, you can download the latest database manually.

1- Go to Google Cloud

2- Compute Engine > VM instances

3- Click on SSH to open a terminal window connected to your server

4- When the command line window opens, type:

sudo su

5- Enter the command below to go back up from the current folder:

cd …

6- Enter the command below to go directly to the folder where the change will be made:

cd nkn/nkn-commercial/services/nkn-node/

7- The command below will delete the current database:

rm -r ChainDB

8- The command below will download the new database (this may take a few minutes):

9- After the download is finished, use the command below to unzip the new database. It is extracted automatically into a folder called ChainDB, the same name as the previous one, but containing the new database:

tar -vzxf ChainDB_pruned_latest.tar.gz

10- After unzipping, you can delete the ChainDB_pruned_latest.tar.gz file to avoid taking up space on the server:

rm ChainDB_pruned_latest.tar.gz

11- Okay, now just restart the server using the reboot command:

reboot
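
The delete and unzip steps above can also be wrapped in a small shell function. This is only a sketch, not part of the original procedure: the function name `replace_chaindb`, its two arguments, and the `ChainDB.old` backup folder are hypothetical choices made for illustration. It assumes the archive has already been downloaded and, as described in step 9, unpacks into a folder called ChainDB. Unlike the manual steps, it keeps the old database until the new one has been extracted, which temporarily needs extra disk space.

```shell
#!/usr/bin/env bash
# Sketch: swap an existing ChainDB folder for one unpacked from a local archive.
# Assumptions (not from the tutorial): the function name, its arguments, and
# keeping the old folder as ChainDB.old until extraction succeeds.

replace_chaindb() {
    local node_dir="$1"   # folder that contains ChainDB (the nkn-node folder)
    local archive="$2"    # already-downloaded ChainDB_pruned_latest.tar.gz

    # Refuse to touch anything if tar cannot read the archive.
    if ! tar -tzf "$archive" > /dev/null 2>&1; then
        echo "cannot read archive: $archive" >&2
        return 1
    fi

    # Move the old database aside instead of deleting it right away.
    rm -rf "$node_dir/ChainDB.old"
    if [ -d "$node_dir/ChainDB" ]; then
        mv "$node_dir/ChainDB" "$node_dir/ChainDB.old"
    fi

    # Unpack the new database; if that fails, put the old one back.
    if tar -xzf "$archive" -C "$node_dir"; then
        rm -rf "$node_dir/ChainDB.old"
    else
        rm -rf "$node_dir/ChainDB"
        if [ -d "$node_dir/ChainDB.old" ]; then
            mv "$node_dir/ChainDB.old" "$node_dir/ChainDB"
        fi
        return 1
    fi
}
```

To use it, load the function in the root shell and call it with full paths, for example `replace_chaindb /path/to/nkn-node /path/to/ChainDB_pruned_latest.tar.gz` (both paths are placeholders), then delete the archive and reboot as in steps 10 and 11.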